Your team uses AI. Now you can prove it's safe.

See what your team shares with ChatGPT, Claude, and Perplexity. Protect sensitive data before it leaves.

Your team uses AI every day. Here's what you're missing.

Last week, someone on your team probably pasted customer data into ChatGPT. Client contracts into Claude. Financial projections into Perplexity. Not because they're careless, but because there's no visibility into what goes in.

Paying for ChatGPT Team or Enterprise? Their admin console shows who has a login, not what data goes in. No sensitive data detection. No cross-platform visibility. A subscription isn't governance.

It comes down to three things

If your team uses AI, these are the fundamentals. Get them right and everything else follows.

Visibility

Which AI tools does your team use? How often? What kind of work goes in? You can't protect what you can't see, and most businesses have no idea what's actually happening.

Data protection

Stop sensitive information before it reaches AI platforms. Client names, financial data, credentials, personal details. Catch it at the point of entry, before it becomes a problem.

Evidence

Audit trails, usage reports, and documented controls that prove your protections work. Not a policy collecting dust. Living evidence from day-to-day operations.

The EU AI Act, ISO 42001, and Australia's Privacy Act each call for these three things in some form. But even without a single regulation, this is just good business.

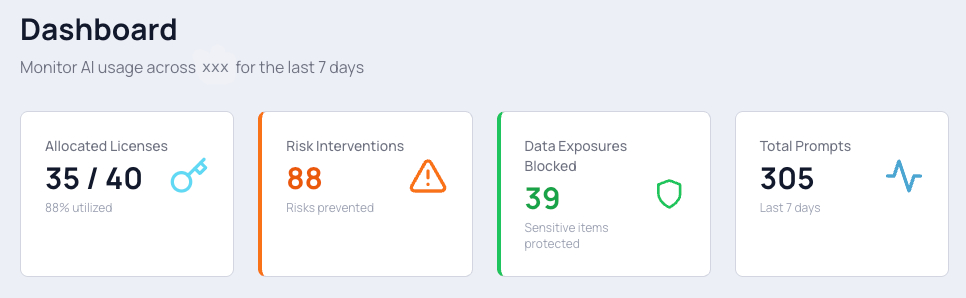

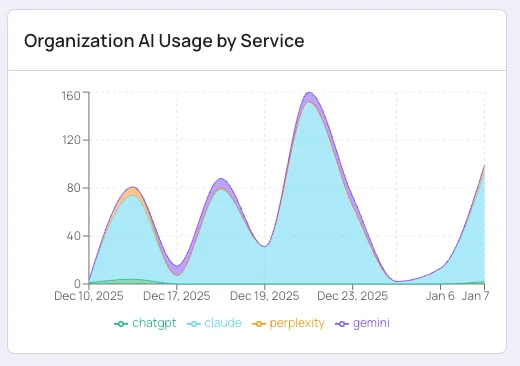

Here's what changes in the first week

Real data from a real team. This is what visibility looks like.

Find out where your team's AI data goes

Your marketing team used Claude 247 times last week. Engineering prefers ChatGPT. Legal hasn't touched AI at all. Finance is heavy on Perplexity for research. Now you know. Not guessing. Knowing.

Catch sensitive data before it reaches AI

100+ detection patterns catch SSNs, credit cards, API keys, client names, and project codes before they reach AI platforms. When someone's about to share something risky, they see a prompt. They can still proceed if they have a good reason. Human in the loop, always.
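
To make the idea concrete, here is a minimal sketch of what deterministic pattern detection can look like. It is an illustration only, not Vireo Sentinel's actual rule set: the three patterns, the `sk-` key prefix, and the names `RULES`, `luhn_valid`, and `detect` are all assumptions made for this example. Card-like numbers are checked with the Luhn checksum so that random 16-digit strings are less likely to trigger a prompt.

```python
import re

# Hypothetical rules for illustration; not the product's real patterns.
RULES = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "credit_card": re.compile(r"\b\d(?:[ -]?\d){12,18}\b"),
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),  # assumed key format
}


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    # Walk from the rightmost digit, doubling every second one.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return len(digits) >= 13 and total % 10 == 0


def detect(text: str) -> list[tuple[str, str]]:
    """Return (rule name, matched text) pairs found in `text`."""
    findings = []
    for label, pattern in RULES.items():
        for match in pattern.finditer(text):
            value = match.group()
            # Drop card-like numbers that fail the checksum.
            if label == "credit_card" and not luhn_valid(value):
                continue
            findings.append((label, value))
    return findings


if __name__ == "__main__":
    sample = "SSN 123-45-6789 and card 4111 1111 1111 1111"
    for label, value in detect(sample):
        print(f"{label}: {value}")
```

Because every rule is a plain regular expression plus a checksum, the same input always produces the same findings, which is what makes this style of detection easy to review.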

Answer the auditor's questions before they ask

Every AI interaction logged. Redacted prompts stored securely. When the auditor asks, you have evidence. When the regulator checks, you have proof. Supports GDPR Article 30 record-keeping requirements.

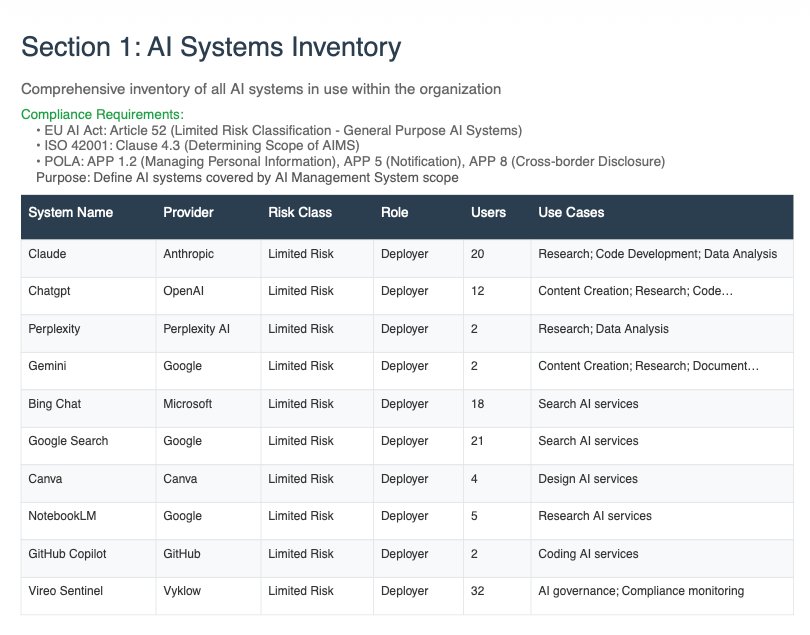

Hand your auditor a report, not an excuse

A full AI governance report covering your systems inventory, risk assessment, control effectiveness, and residual risk. Reports mapped to EU AI Act, ISO 42001, and Australian Privacy Act sections to support your compliance work. Hand it to an auditor, attach it to a client proposal, or present it to the board.

Download an example report

Built differently from the ground up

Most AI security tools use AI to monitor AI, process your data in the cloud, and take months to deploy. We took a different approach.

Your sensitive data never leaves the browser

Detection happens locally, in the browser extension, before anything reaches our servers. Sensitive data is redacted at the source. We see metadata, not your secrets.
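
As a rough sketch of "redacted at the source", the snippet below replaces matches with placeholders and builds a metadata record before anything would be sent on. Everything in it is hypothetical: the two patterns, the placeholder format, and the field names in `build_event` are assumptions for illustration, not the product's real schema or implementation.

```python
import re
from datetime import datetime, timezone

# Hypothetical patterns and field names, for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a placeholder and count hits per label."""
    counts: dict[str, int] = {}
    redacted = prompt
    for label, pattern in PATTERNS.items():
        redacted, hits = pattern.subn(f"[REDACTED:{label}]", redacted)
        if hits:
            counts[label] = hits
    return redacted, counts


def build_event(user: str, platform: str, prompt: str) -> dict:
    """Build the record that would leave the device: metadata plus redacted text."""
    redacted, counts = redact(prompt)
    return {
        "user": user,
        "platform": platform,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "redacted_prompt": redacted,
        "detections": counts,
    }


if __name__ == "__main__":
    event = build_event("alice", "ChatGPT", "Email jane.doe@example.com about 123-45-6789")
    print(event["redacted_prompt"])
    print(event["detections"])
```

The point of the design is that the raw prompt exists only inside `redact`; the record that travels onward carries placeholders and counts.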

Deterministic, not AI

We use pattern matching you can audit, not opaque ML models. Every detection rule is transparent and predictable. We don't use AI to protect against AI.

10 minutes to deploy, not 5 weeks

Browser extension, not enterprise infrastructure. No network changes, no proxy configuration, no IT tickets. Your team installs the extension and you have visibility the same day.

Traditional DLP wasn't built for AI

DLP watches network traffic and file movements. When someone types confidential information directly into ChatGPT, there's no file to scan.

Traditional DLP

- Monitors file transfers and network traffic

- Binary block or allow decisions

- Doesn't see content typed into the browser

- Enterprise complexity, enterprise cost

- Months to deploy

Vireo Sentinel

- Monitors at the browser, before data leaves

- Cancel, redact, edit, or override with justification

- Catches typed content, pasted data, and file uploads

- Built for growing companies, not just enterprises

- Live in 10 minutes

Already have enterprise DLP? Vireo fills the AI-specific gap it wasn't designed to cover.

Built for how your industry works

Every industry handles different sensitive data. We've mapped Vireo to the specific risks and regulations that matter in yours.

Legal

Protect client privilege and case files from AI disclosure

Accounting

Keep tax file numbers and client financials out of AI prompts

Financial Advisers

Meet AFSL obligations when your team uses AI for research

Recruitment

Stop CVs and candidate data flowing into ChatGPT

Education

Protect student records while enabling AI-assisted teaching

Mining Services

Safeguard tender data, safety records, and client information

Up and running in 10 minutes

No IT expertise required. No complex integrations. No network changes.

Install the extension

Your team installs a lightweight browser extension. Chrome, Firefox, Edge, Brave. Under 2 minutes per person.

Work naturally, intervene when it matters

The extension monitors quietly in the background. When someone pastes sensitive data, uploads a file, or hits a risk threshold, it steps in before the data reaches AI.

See everything

Your dashboard gives you complete visibility: which tools, which teams, which risks. Evidence, not assumptions.

Want to see it in action? Watch our setup videos

Transparent pricing. No sales calls.

Starts at $55/month for up to 5 users. Per-user cost drops as your team grows. 14-day free trial.

Built by people who understand governance

We've led enterprise technology and global operations. We know what boards and auditors actually need.

David Kelly

Co-Founder & Director

25+ years leading global operations. Former Group Managing Director at Aquirian Limited (ASX: AQN). Led complex organisations across mining services and heavy industry. Knows what boards and auditors actually need to see.

Stephen Kelly

Co-Founder & Director

AI, data, and automation expert. Senior architect roles at Dell Technologies and Hitachi Vantara. Led technology transformations for Rio Tinto, BHP, Woodside, and Goldman Sachs. Builds technology that amplifies human judgment.

Vireo Sentinel is built by Vyklow Analytics.

Common questions

Worries about employee monitoring come up a lot. Vireo shows interventions to users; it is not silent surveillance. When someone's about to share sensitive data, they see a prompt asking them to reconsider. They can still proceed if they have a good reason. Your team stays in control. Most companies find that once employees understand it's about protecting them (and the company) rather than watching them, the resistance disappears.

Risk detection happens in the browser before anything leaves your device. Sensitive data is redacted before it reaches our servers. We store metadata about AI interactions (who, when, which platform, risk score) but the actual prompt content is redacted. Your organisation's data is completely isolated from other customers.

About 10 minutes for the admin, under 2 minutes per employee. You create an account, set up your organisation, and invite your team. They install a browser extension. That's it. No network configuration, no proxy setup, no IT tickets. Works on Chrome, Firefox, Edge, and Brave.

Traditional DLP watches network traffic and file movements. It wasn't built for AI. When someone types confidential information directly into ChatGPT, most DLP tools don't see it because there's no file to scan. Vireo works at the browser level, catching data at the point of entry. If you have enterprise DLP, Vireo fills the AI-specific gap.

Get visibility in 10 minutes. Evidence from day one.

14-day free trial. Cancel anytime.